Preface

When you’re dealing with SSD storage, chances are a single network interface is just not enough. No, not even 10GbE is. In fact, with our current 24 SSD array we actually need at least 70 Gbps (yes, you’ve read that right) to fully utilize it. I can’t even fit that many cards in the box, so I’ll settle for 60: there are 3 PCI-E x8 slots, so I can add 3 of the dual-port Intel X540-T2s (6 ports at 10 Gbps each).

Link Aggregation

At this point you obviously need to apply some technique to make SAN traffic go through all these NICs instead of just one. On FreeBSD (and thus FreeNAS) there’s lagg which is, TL;DR, a link aggregation implementation that supports LACP, the same standard Windows’ NIC teaming uses. That’s all great, except LACP only helps when you’re dealing with many clients. Each stream gets pinned to one link, so a single stream will only ever utilize a single NIC’s bandwidth at most. Once you get 10 clients, your underutilization problem is solved. This is why link aggregation is great for a NAS scenario where you either have a shitton of clients or the usage is so light that it doesn’t matter if storage access is slightly slower.
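To make the "one stream, one NIC" point concrete, here’s a minimal sketch of hash-based link selection. This is not lagg’s actual hash; `pick_link`, the CRC32 hash, and every address except 192.168.8.6 are assumptions made up for illustration. The only thing it’s meant to show is that the same flow always lands on the same link.

```python
import zlib

def pick_link(src_ip, src_port, dst_ip, dst_port, n_links):
    """Map one flow to one link index. Same flow in, same link out."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % n_links

N_LINKS = 6  # the six 10 Gbps ports on the SAN box

# One stream, hashed 1000 times: it never leaves its link.
one_flow = ("192.168.8.20", 50000, "192.168.8.6", 3260)  # client IP is hypothetical
links_one_flow = {pick_link(*one_flow, N_LINKS) for _ in range(1000)}

# Ten clients, ten different flows: these can land on different links.
ten_flows = [(f"192.168.8.{20 + i}", 50000 + i, "192.168.8.6", 3260)
             for i in range(10)]
links_ten_flows = {pick_link(*flow, N_LINKS) for flow in ten_flows}

print(len(links_one_flow))      # always 1
print(sorted(links_ten_flows))  # which links the ten flows ended up on
```

How evenly the ten flows spread depends entirely on the hash, which is why aggregation only pays off with enough distinct clients.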

iSCSI Multipath

Cool, but what if I have, say, 2 Hyper-V boxes in a cluster, each with 3 NICs, that will use my 6 NICs on the SAN box? Yes, you got that right: with link aggregation they’d use 20 Gbps (10 Gbps each) of the 60 available. The rest is flushed down the toilet. That’s why you also have iSCSI multipath. In this case iSCSI traffic can go through as many NICs as you want; you just have to configure both the server (target in iSCSI terms) and the clients (initiators) correctly.
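The arithmetic behind those numbers, as a back-of-the-envelope sketch. It assumes one iSCSI stream per client under link aggregation, no hash collisions, and a clean 10 Gbps per link; the function names are mine, not anything from FreeNAS or Windows.

```python
LINK_GBPS = 10  # per-port speed of the X540-T2

def aggregated_gbps(n_clients, target_links):
    """Best case with link aggregation: each client's stream sits on one link."""
    return min(n_clients, target_links) * LINK_GBPS

def multipath_gbps(n_clients, nics_per_client, target_links):
    """Best case with multipath: every initiator NIC carries traffic."""
    return min(n_clients * nics_per_client, target_links) * LINK_GBPS

print(aggregated_gbps(2, 6))    # 20
print(multipath_gbps(2, 3, 6))  # 60
```

These are ceilings, not benchmarks; real throughput will sit below them.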

Windows

In this regard, both Windows Server and FreeNAS seem quite braindamaged, and in this case FreeNAS is prolly the winner (or loser, actually). On Windows, the target configuration is pretty simple: select the appropriate NICs to be used in Server Manager. Of course there always has to be a bug. Naturally, you want the iSCSI target service to start automatically, and this is even the default setting. But after every reboot, you’ll get something like this with multiple NICs:

The Microsoft iSCSI Target Server service could not bind to network address 192.168.8.6, port 3260. The operation failed with error code 10049. Ensure that no other application is using this port.

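No shit, it’s not some other application. Winsock error 10049 is WSAEADDRNOTAVAIL, meaning the address wasn’t available to bind to, so my guess is the service simply starts before all the NICs are up. Solution? Set the service to start manually and start it by hand after every reboot; it’ll work just fine. You don’t really do automatic reboots on a server anyway, so this is a tolerable compromise.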

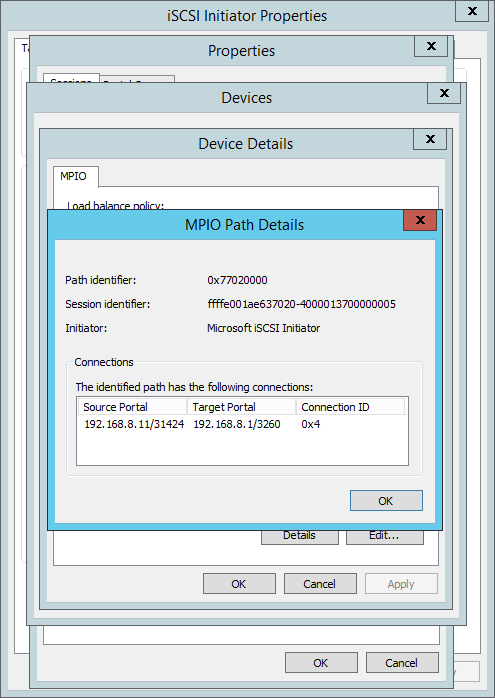

Anyhow, the initiator configuration is where things get really ugly. You need to add a “session” for every path. That means if your initiator has 3 NICs and the server has 6, you have to add 3 × 6 = 18 sessions per initiator (36 in total for my two initiators). Why can’t this be automatic? Furthermore, the GUI is so freakin’ horrible: it has at least 4 levels of popups for this, and it’s by no means easy to figure out or see through.

Yes, this is what it looks like, really. And the best part is that it allows you to add the SAME session as many times as you want, and to figure that out you have to check and memorize each and every session through these 4 layers of popups. Atrocious.
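Since the GUI won’t keep track for you, it helps to write the session list down somewhere sane. Here’s a small sketch that enumerates every initiator NIC and target NIC pair and flags duplicates or gaps. All addresses except 192.168.8.6 are made up, and `configured` stands in for whatever you noted down by hand; nothing here talks to the actual initiator.

```python
from collections import Counter
from itertools import product

def required_sessions(initiator_ips, target_ips):
    """One session per (initiator NIC, target NIC) pair."""
    return list(product(initiator_ips, target_ips))

def duplicates(configured):
    """Sessions that were added more than once."""
    return sorted(s for s, n in Counter(configured).items() if n > 1)

def missing(configured, required):
    """Required sessions that were never added."""
    return sorted(set(required) - set(configured))

initiator_ips = [f"192.168.8.{i}" for i in (21, 22, 23)]  # hypothetical
target_ips = [f"192.168.8.{i}" for i in range(6, 12)]     # .6 is from the error above

required = required_sessions(initiator_ips, target_ips)
print(len(required))  # 18

# Pretend one session was added twice and the last one forgotten.
configured = required[:-1] + [required[0]]
print(duplicates(configured))
print(missing(configured, required))
```

With two initiators you’d run this once per box, giving the 36 sessions in total.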

But even though MPIO (Multipath I/O) is a GUI nightmare, at least it works. I’ve managed to push half of the bandwidth down the lane with 4 NICs on the initiator and 7 on the target (yes, I sacrificed the board ports for testing). Expect benchmark numbers later.

FreeNAS

On FreeNAS you can also use multipath, but networking gets in the way. They consider having multiple NICs on the same subnet broken networking. WTF?

This means I should put each of my target NICs on a different subnet, then hardcode all my initiators on a random subset of these subnets. Most illogical.
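For what it’s worth, here’s roughly what that one-subnet-per-NIC layout would look like, as a sketch using Python’s `ipaddress` module. The 10.10.0.0/16 range, the /24 split, and the host numbering are all assumptions I picked for illustration, not anything FreeNAS dictates.

```python
import ipaddress
from itertools import islice

def plan_subnets(base, n_target_nics, new_prefix=24):
    """One subnet per target NIC; the target takes host .1 in each."""
    subnets = islice(
        ipaddress.ip_network(base).subnets(new_prefix=new_prefix),
        n_target_nics,
    )
    return [(net, net[1]) for net in subnets]

def assign_initiator(plan, initiator_index, nics_per_initiator):
    """Give an initiator a fixed slice of the subnets, one per NIC."""
    start = (initiator_index * nics_per_initiator) % len(plan)
    picked = [plan[(start + i) % len(plan)] for i in range(nics_per_initiator)]
    # Hosts .10, .11, ... per initiator: a purely illustrative convention.
    return [(net, net[10 + initiator_index]) for net, _target_ip in picked]

plan = plan_subnets("10.10.0.0/16", 6)  # hypothetical address range
for i in range(2):
    for net, ip in assign_initiator(plan, i, 3):
        print(f"initiator {i}: {ip} on {net}")
```

Note what falls out of this: each 3-NIC initiator only ever sees 3 of the 6 target NICs, which is exactly the hardcoded-subset mess I’m complaining about.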

Of course, I could use static DHCP leasing and FreeNAS would accept that “broken networking” without a word, which is particularly ironic since FreeNAS uses DHCP on all NICs by default – but I consider it really bad practice since if your Hyper-V SAN is down, it’s quite likely your DHCP servers are down, too (except if you’re using a router or something, which we don’t).

Another option is to just modify this setting directly in FreeNAS’ SQLite database; again, it’d happily accept it as a valid configuration, because it’s only the web UI that won’t let me do this. But this, too, sounds like an awful hack.

So long story short, on FreeNAS if you want sane iSCSI multipath, you’re out of luck. It’s doable, but… man. For this reason, I’ve decided to stick with Storage Spaces for the SAN, but for our NAS (SMB) needs I’ll prolly ditch it in favor of FreeNAS eventually, both because it spares me a Windows Server license and because I can use parity RAID schemes, which give me so much more usable disk space.

Anyway, enough of my rants for today, take care!